To test my Aceproxy, I posted the link to a particular subreddit to generate some traffic. One of the main issues I saw with the proxy (apart from being reliant on the Acestream source) was that end users and the Acestream swarm were both competing for the available bandwidth. This caused drops for users. This is a quick rundown of how I split the Acestream engine and the end users onto separate servers.

This run-through assumes you have already set up the Acestream proxy (see HERE if not).

Also, this requires 2 servers instead of just one.

From now on, I'll be referring to 2 servers:

server1 – Acestream “backend”.

server2 – the server end users will connect to.

This is actually quite simple. It's just a case of setting up the 2 servers to do different roles.

Server1

All server1 does is run the acestream-engine. That's it. This server just deals with the P2P traffic.

The only change from how we previously started the acestream-engine is an additional argument, --bind-all, so that server2 can connect to the engine over the network:

```
jon@stream-origin:~$ acestream-engine --client-console --bind-all
```

That's it for server1.
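Once the engine is running with --bind-all, it can be worth confirming from server2 that server1's engine is actually reachable before touching the proxy. Below is a minimal Python sketch. It assumes the engine API listens on TCP port 62062 (the port Aceproxy commonly uses for `aceport` in aceconfig.py; check your own config), and `engine_reachable` is a hypothetical helper, not part of Aceproxy.

```python
import socket


def engine_reachable(host: str, port: int = 62062, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout.

    62062 is an ASSUMED Acestream engine API port; match it to the
    aceport value in your own aceconfig.py.
    """
    try:
        # create_connection resolves the host and opens a TCP socket.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unresolvable hosts.
        return False
```

Run it on server2 with server1's IP. A False result may mean the engine wasn't started with --bind-all, or that a firewall sits between the two boxes.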

Server2

This is where the bigger config changes are.

As for what needs to be installed, just follow the previous install steps (HERE) but don't install the acestream-engine.

In the aceconfig.py file, set the “acehost” parameter to the IP of server1:

```python
acehost = 'SER.VER.1.IP'
```
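Because the whole point is for server2 to reach server1 over the network, acehost must not be left at a loopback address such as 127.0.0.1, which would point server2 at itself. Here is a small sketch using Python's standard `ipaddress` module. `acehost_looks_remote` is a hypothetical helper, and it only accepts literal IP addresses, not hostnames.

```python
import ipaddress


def acehost_looks_remote(acehost: str) -> bool:
    """Return True if acehost is a literal IP that can point at another machine.

    Loopback (127.0.0.0/8, ::1) and unspecified (0.0.0.0, ::) addresses
    would make server2 talk to itself, so they are rejected. Hostnames
    are not handled by this sketch and also return False.
    """
    try:
        ip = ipaddress.ip_address(acehost)
    except ValueError:
        # Not a literal IPv4/IPv6 address (e.g. the 'SER.VER.1.IP' placeholder).
        return False
    return not (ip.is_loopback or ip.is_unspecified)
```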

That's really all that should need changing.

Now we just need to start the relevant services on each server.

Start the streams

Server1 –

```
jon@stream-origin:~$ acestream-engine --client-console --bind-all
```

Server2 –

```
jon@stream-origin:~$ vlc -I telnet --clock-jitter -1 --network-caching -1 --sout-mux-caching 2000 --telnet-password admin
```

```
jon@stream-origin:~$ python acehttp.py
```
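Order matters on server2: the proxy drives VLC through the telnet interface started by the vlc command, so VLC should be listening before acehttp.py starts. If you script the startup, a polling sketch like this one could wait for it. It assumes VLC's default telnet port, 4212, since the vlc command above doesn't set a telnet port. `wait_for_port` is a hypothetical helper.

```python
import socket
import time


def wait_for_port(host: str, port: int = 4212, timeout: float = 30.0,
                  interval: float = 0.5) -> bool:
    """Poll host:port until a TCP connection succeeds or timeout expires.

    4212 is VLC's default telnet port; it is ASSUMED here because the
    vlc command above does not set a different one.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            pass  # Not listening yet (or unreachable); retry until the deadline.
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

For example, a startup script could call `wait_for_port("127.0.0.1")` and only launch `python acehttp.py` once it returns True.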

That should be it. Now go through the same procedure to view a stream as you did before, just using server2's IP.
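If you saved playback URLs from the single-server setup, only the host part should need to change. Below is a sketch using the standard `urllib.parse` module. `point_at_server2` is a hypothetical helper, and the URLs in any example are made up for illustration, not Aceproxy's real URL scheme.

```python
from urllib.parse import urlsplit, urlunsplit


def point_at_server2(url: str, server2_host: str) -> str:
    """Return url with its host replaced by server2_host.

    Scheme, port, path, query and fragment are kept. Any user:password
    part is dropped, and IPv6 literals (which need [brackets]) are not
    handled by this sketch.
    """
    parts = urlsplit(url)
    # Keep the original port (if any) so only the machine changes.
    netloc = server2_host if parts.port is None else f"{server2_host}:{parts.port}"
    return urlunsplit((parts.scheme, netloc, parts.path, parts.query, parts.fragment))
```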

For those who are interested, here are some SNMP graphs showing the difference this setup makes.

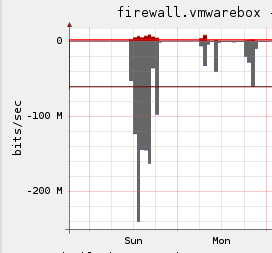

Before the split of services

Unfortunately I didn't grab the graph from Cacti with the services all on one server, though I do have the historical bandwidth graph from the firewall:

You can see that with both the Acestream swarm and end users on this machine, traffic hit over 200Mbps. This lasted over an hour. There were some big games last weekend, so it's to be expected.

After the change

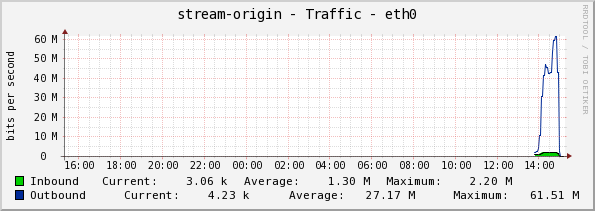

Now we have some better graphs for today's stats.

First we have the Acestream server itself. Almost all of the outgoing traffic is the Acestream swarm; only a tiny fraction of it is the stream sent to the proxy. That stream is visible on the server the end users hit, as it's the only traffic classed as incoming there.

Server1 – acestream-engine

The inbound here is the video we want to be watching.

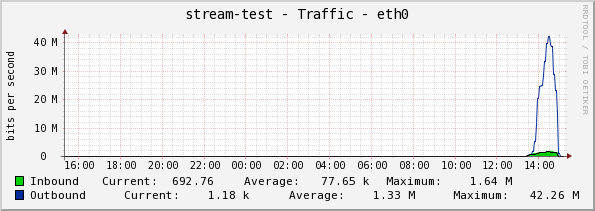

Server2 – aceproxy

This is the server that is actually sending streams out to end users.

As you can see here, the green is incoming: that's the video from server1. The blue is outgoing: that's the video going out to end users.

The beauty of this setup is that adding more servers to accommodate more users only adds a tiny load to the acestream-engine server, yet gives you a massive boost in outgoing bandwidth.
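A rough back-of-envelope model of why this scales, under simplifying assumptions rather than measurements: each proxy server pulls one copy of each channel from server1 and VLC fans it out to that server's viewers. Server1's extra load then grows with proxies × channels, while user-facing bandwidth grows with proxies × viewers. `Deployment` and both functions are hypothetical, and the numbers below are illustrative, not taken from the graphs above.

```python
from dataclasses import dataclass


@dataclass
class Deployment:
    proxies: int             # number of server2-style aceproxy boxes
    channels: int            # distinct streams pulled by each proxy (assumed equal on all)
    viewers_per_proxy: int   # end users connected to each proxy
    bitrate_mbps: float      # assumed bitrate of a single stream


def backend_to_proxies_mbps(d: Deployment) -> float:
    """Traffic server1 sends to the proxies: one copy per channel per proxy.

    The P2P swarm traffic on server1 is ignored by this model.
    """
    return d.proxies * d.channels * d.bitrate_mbps


def proxies_to_users_mbps(d: Deployment) -> float:
    """Traffic the proxies send to end users: one copy per viewer."""
    return d.proxies * d.viewers_per_proxy * d.bitrate_mbps
```

With `Deployment(proxies=2, channels=1, viewers_per_proxy=50, bitrate_mbps=4.0)`, server1 sends 8 Mbps to the proxies while the proxies push 400 Mbps to users. A third proxy adds another 4 Mbps on server1 but another 200 Mbps of user-facing traffic.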